Why Retrieval Strategy Changes Everything

Retrieval-Augmented Generation (RAG) has reshaped AI and natural language processing. The framework improves the quality and factual grounding of large language models (LLMs) by giving them access to external, often proprietary knowledge bases, such as your organization's documents.

RAG’s effectiveness, however, is not fixed. It depends heavily on the question asked and the retrieval strategy employed. Failing to adapt the retrieval approach to the query type leads to poor results, whether you are solving a crime or a complex business problem.

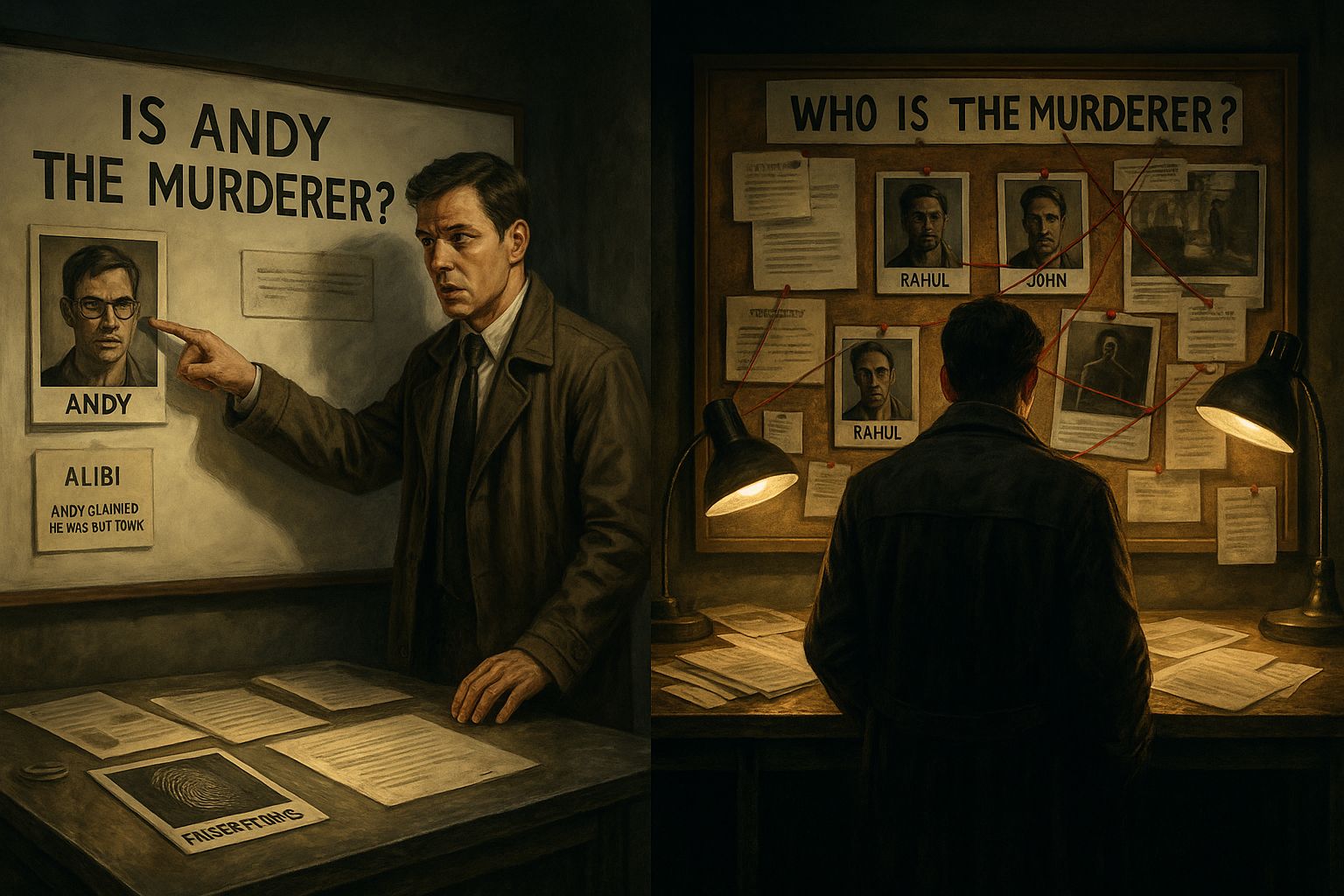

To explain the two fundamental modes of RAG retrieval and show why retrieval design matters so much, let's turn to a classic whodunit: The Murder at Maple Mansion.

Detective AI and the RAG Assistant

It’s a stormy night, and a murder has occurred at Maple Mansion. Detective AI arrives, accompanied by a powerful assistant: the RAG system. This RAG can instantly query and synthesize information from all the case files, testimonies, and evidence logs stored in the police database.

How RAG is used, and how effective it is, depends on the nature of the query. We can break down the detective’s questions into two primary categories.

When RAG Knows the Answer: Focused Search

This type of query represents a highly focused, specific information retrieval task.

The Question: "Is Andy the murderer?"

The detective isn't exploring; they have a target and need RAG to confirm or deny the suspicion using direct evidence.

What RAG Does (The Focused RAG Process):

Targeted Retrieval: The RAG system uses the key entity in the query ("Andy") to run a narrow search across the database for documents or snippets that mention Andy specifically in the context of the crime.

Conflicting Evidence: The retriever surfaces key, high-relevance snippets:

- Andy’s fingerprints were found on the murder weapon.

- A verified witness confirms Andy was out of town at the time of the crime.

Snippet Generation: The generator synthesizes only these relevant snippets into a response that addresses the suspicion while presenting the alibi.

The RAG Equivalent: This scenario aligns with a RAG setup used for FAQ bots or customer support search. The LLM relies solely on the few, highly targeted documents retrieved to construct a precise, factual answer.
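The focused process above can be sketched in a few lines of Python. This is a minimal illustration, not a production retriever: the `Snippet` type, the `CASE_FILE` contents, and the `focused_retrieve` and `build_prompt` helpers are all hypothetical names invented here, and a simple name match stands in for a real keyword or vector search.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    source: str
    text: str


# Hypothetical case file, invented for illustration only.
CASE_FILE = [
    Snippet("forensics", "Andy's fingerprints were found on the murder weapon."),
    Snippet("witness", "A verified witness confirms Andy was out of town at the time of the crime."),
    Snippet("camera_log", "Joe left the room before the murder."),
    Snippet("interview", "Arjun had a clear motive and no alibi."),
]


def focused_retrieve(entity, corpus, top_k=3):
    """Return up to top_k snippets that mention the entity as a whole word."""
    pattern = re.compile(rf"\b{re.escape(entity)}\b", re.IGNORECASE)
    hits = [s for s in corpus if pattern.search(s.text)]
    return hits[:top_k]


def build_prompt(question, snippets):
    """Assemble a prompt that restricts the generator to the retrieved evidence."""
    evidence = "\n".join(f"- [{s.source}] {s.text}" for s in snippets)
    return f"Answer using only this evidence:\n{evidence}\n\nQuestion: {question}"


hits = focused_retrieve("Andy", CASE_FILE)
prompt = build_prompt("Is Andy the murderer?", hits)
```

Here both the fingerprint record and the alibi reach the generator, so the answer can weigh them against each other. If the retriever dropped either one, the generator would never see it.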

When RAG Must Reason: Exploratory Search

This query represents an open-ended, exploratory search requiring synthesis and cross-referencing across multiple data points.

The Question: "Who is the murderer?"

The detective doesn’t have a name; they need RAG to look at all the facts, connect the dots, and formulate a high-confidence hypothesis.

What RAG Does (The Exploratory RAG Process):

Broad Retrieval: RAG must scan the entire knowledge base (interviews, autopsy reports, camera footage logs) to find clues pointing to any of the possible suspects: Andy, Joe, and Arjun. The retriever plays a broader role here, gathering a range of potentially useful documents.

Synthesizing Across Documents: The RAG system must now compare and contrast the different pieces of information across various sources:

- It discounts Joe based on a camera log showing him leaving the room before the murder.

- It discounts Andy due to his confirmed alibi.

- It highlights Arjun, who had a clear motive and no alibi.

Reasoned Generation: The generator must now reason across these diverse retrieved pieces of evidence to construct a high-level, reasoned hypothesis that Arjun is the most likely culprit.

The RAG Equivalent: This scenario is equivalent to a RAG system acting as a research or audit assistant. The system must surface and synthesize diverse information from the entire document corpus to construct a coherent, reasoned, and data-driven answer.
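A rough Python sketch of the exploratory process follows. It makes two loud assumptions: the `EVIDENCE` records are invented for illustration and already carry a hypothetical `kind` label, and the elimination rule in `remaining_suspects` is a rule-based stand-in for the reasoning an LLM generator would perform over the retrieved text.

```python
from collections import defaultdict

# Hypothetical evidence log: (suspect, kind, note). The "kind" label is an
# assumption; in a real system the generator would infer it from the text.
EVIDENCE = [
    ("Andy", "incriminating", "Fingerprints found on the murder weapon."),
    ("Andy", "exculpatory", "Verified witness places him out of town."),
    ("Joe", "exculpatory", "Camera log shows him leaving the room before the murder."),
    ("Arjun", "incriminating", "Clear motive and no alibi."),
]


def broad_retrieve(evidence):
    """Group every record by suspect instead of filtering to a single name."""
    by_suspect = defaultdict(list)
    for suspect, kind, note in evidence:
        by_suspect[suspect].append((kind, note))
    return dict(by_suspect)


def remaining_suspects(by_suspect):
    """Keep only suspects who have no exculpatory record."""
    return [
        suspect
        for suspect, records in by_suspect.items()
        if not any(kind == "exculpatory" for kind, _ in records)
    ]


grouped = broad_retrieve(EVIDENCE)
hypothesis = remaining_suspects(grouped)
```

The difference from the focused case is that nothing is filtered out before the comparison step: every suspect's records are gathered first, and the conclusion only emerges from cross-referencing them.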

The Strategic Impact: Why Retrieval Design Matters

The Maple Mansion mystery reveals a critical insight: the same RAG system performs two completely different functions depending on the query's focus.

A poorly designed retrieval strategy leads directly to failed outcomes:

- For Focused Searches: A bad retriever might miss the single document containing Andy’s alibi, leading the LLM to provide a factually incorrect, highly misleading answer.

- For Exploratory Searches: A bad retriever might return too many irrelevant documents, overwhelming the generator and causing it to construct a flimsy or misleading hypothesis due to noise.
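One way to guard against both failure modes is to pick retrieval settings per query type before searching. The sketch below is only a heuristic illustration: the `RetrievalPlan` type, the `plan_retrieval` function, the list of opening words, and the `top_k` values of 3 and 20 are all assumptions chosen for the example, and a real system might classify queries with an LLM or a trained model instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievalPlan:
    mode: str
    top_k: int
    rerank: bool


# Assumed heuristic: questions that open with these words are usually
# closed (yes/no) questions about a known target.
CLOSED_OPENERS = ("is", "are", "was", "were", "did", "does", "do", "has", "have", "can")


def plan_retrieval(question):
    """Choose a narrow plan for closed questions and a broad plan otherwise."""
    words = question.strip().lower().split()
    first_word = words[0] if words else ""
    if first_word in CLOSED_OPENERS:
        # Few snippets: the answer hinges on a small set of direct evidence.
        return RetrievalPlan(mode="focused", top_k=3, rerank=False)
    # Many candidates, then re-rank to cut noise before generation.
    return RetrievalPlan(mode="exploratory", top_k=20, rerank=True)


focused_plan = plan_retrieval("Is Andy the murderer?")
exploratory_plan = plan_retrieval("Who is the murderer?")
```

The focused plan keeps the context small so the key document is not diluted, while the exploratory plan casts a wide net and relies on re-ranking to filter out irrelevant results.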

At ResEt AI, we treat the retrieval stage as the most crucial part of RAG. When building enterprise-ready solutions, we design specialized retrieval strategies (including hybrid search and re-ranking) so that your LLM has the right evidence, whether that is one critical snippet or a comprehensive set of diverse documents, to solve complex business problems with precision.
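Hybrid search generally means combining the results of a keyword search and a vector search into one ranked list. A common, simple way to do this is reciprocal rank fusion (RRF), sketched below. This is offered as a generic illustration of the idea, not as a description of any particular vendor's pipeline; the document IDs and the two input rankings are made up, and `k=60` is a conventional default rather than a tuned value.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranked list.

    Each document scores 1 / (k + rank) per list it appears in, with rank
    starting at 1. Ties are broken alphabetically by document ID.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical outputs of a keyword retriever and a vector retriever.
keyword_ranking = ["fingerprints", "alibi", "camera_log"]
vector_ranking = ["alibi", "motive", "fingerprints"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
# fused == ["alibi", "fingerprints", "motive", "camera_log"]
```

Documents that both retrievers rank highly rise to the top, which is why the alibi record ends up first here even though the keyword search alone placed it second.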

Executive Summary

RAG (Retrieval-Augmented Generation) is a revolutionary framework, but its success hinges on its ability to adapt the retrieval strategy to the query type.

- Focused Queries (like "Is Andy the murderer?") require targeted retrieval for specific facts.

- Exploratory Queries (like "Who is the murderer?") require broad retrieval and reasoning across diverse evidence.

Chasing speed and volume without strategic intent leads to flawed results, just as a detective chasing every false lead wastes time. The winning strategy is to design RAG retrieval to match the decision type, ensuring your AI always finds and synthesizes the correct context for the task at hand.